Summaries


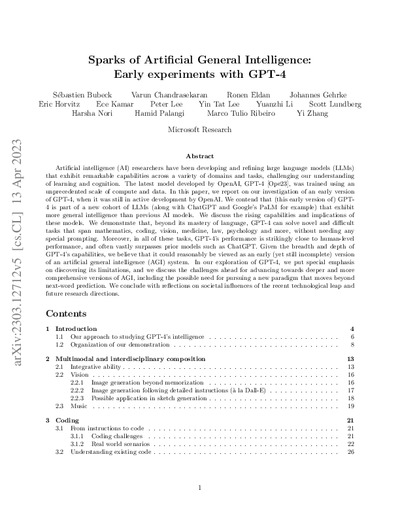

Artificial intelligence (AI) researchers have been developing and refining large language models (LLMs) that exhibit remarkable capabilities across a variety of domains and tasks, challenging our understanding of learning and cognition. The latest model developed by OpenAI, GPT-4 [Ope23], was trained using an unprecedented scale of compute and data. In this paper, we report on our investigation of an early version of GPT-4, when it was still in active development by OpenAI. We contend that (this early version of) GPT-4 is part of a new cohort of LLMs (along with ChatGPT and Google’s PaLM for example) that exhibit more general intelligence than previous AI models. We discuss the rising capabilities and implications of these models. We demonstrate that, beyond its mastery of language, GPT-4 can solve novel and difficult tasks that span mathematics, coding, vision, medicine, law, psychology and more, without needing any special prompting. Moreover, in all of these tasks, GPT-4’s performance is strikingly close to human-level performance, and often vastly surpasses prior models such as ChatGPT. Given the breadth and depth of GPT-4’s capabilities, we believe that it could reasonably be viewed as an early (yet still incomplete) version of an artificial general intelligence (AGI) system. In our exploration of GPT-4, we put special emphasis on discovering its limitations, and we discuss the challenges ahead for advancing towards deeper and more comprehensive versions of AGI, including the possible need for pursuing a new paradigm that moves beyond next-word prediction. We conclude with reflections on societal influences of the recent technological leap and future research directions.


This book mentions ...

People: Sandhini Agarwal, Dario Amodei, Amanda Askell, Emily M. Bender, Christopher Berner, Tom B. Brown, Mark Chen, Benjamin Chess, Rewon Child, Jack Clark, Kewal Dhariwal, Prafulla Dhariwal, Timnit Gebru, Aidan N. Gomez, Scott Gray, Tom Henighan, Ariel Herbert-Voss, Christopher Hesse, Llion Jones, Lukasz Kaiser, Jared Kaplan, Gretchen Krueger, Mateusz Litwin, Benjamin Mann, Sam McCandlish, Angelina McMillan-Major, Arvind Neelakantan, Helen Nissenbaum, Niki Parmar, Illia Polosukhin, Alec Radford, Aditya Ramesh, Nick Ryder, Girish Sastry, Noam Shazeer, Shmargaret Shmitchell, Pranav Shyam, Eric Sigler, Melanie Subbiah, Ilya Sutskever, Jakob Uszkoreit, Ashish Vaswani, Clemens Winter, Jeffrey Wu, Daniel M. Ziegler

Claims:

- Computer-generated texts increase information overload
- Generative machine-learning systems make it massively easier to generate fake news
- Machine learning can reinforce and perpetuate existing prejudices and injustices

Terms: AGI, ChatGPT, Generative Machine-Learning Systems (GMLS, computer-generated text), Generative Pretrained Transformer 4 (GPT-4), Hallucination, Artificial Intelligence (AI), Mathematics, Psychology, Theory of Mind (ToM)


This book probably does not mention ...


Terms not mentioned: Generative Pretrained Transformer 3 (GPT-3), GMLS & education, GMLS & school


8 mentions


- Generative AI at Work (Erik Brynjolfsson, Danielle Li, Lindsey R. Raymond) (2023)

- Hey Siri, vernichte uns! (Jannis Brühl) (2023)

- The Future of AI in Education - 13 Things We Can Do to Minimize the Damage (Arran Hamilton, Dylan Wiliam, John Hattie) (2023)

- The Coming Wave - Technology, Power, and the Twenty-first Century's Greatest Dilemma (Mustafa Suleyman, Michael Bhaskar) (2023)

- Künstliche Intelligenz, Large Language Models, ChatGPT und die Arbeitswelt der Zukunft (Michael Seemann) (2023)

- Künstliche Intelligenz - Dem Menschen überlegen - wie KI uns rettet und bedroht (Manfred Spitzer) (2023)

- Alles überall auf einmal - Wie Künstliche Intelligenz unsere Welt verändert und was wir dabei gewinnen können (Miriam Meckel, Léa Steinacker) (2024)

- The impact of generative artificial intelligence on socioeconomic inequalities and policymaking (Valerio Capraro, Austin Lentsch, Daron Acemoglu, Selin Akgun, Aisel Akhmedova, Ennio Bilancini, Jean-François Bonnefon, Pablo Brañas-Garza, Luigi Butera, Karen Douglas, Jim Everett, Gerd Gigerenzer, Christine Greenhow, Daniel Hashimoto, Julianne Holt-Lunstad, Jolanda Jetten, Simon Johnson, Werner Kunz, Chiara Longoni, Pete Lunn, Simone Natale, Stefanie Paluch, Iyad Rahwan, Neil Selwyn, Vivek Singh, Siddharth Suri, Jennifer Sutcliffe, Joe Tomlinson, Sander van der Linden, Paul Van Lange, Friederike Wall, Jay Van Bavel, Riccardo Viale) (2024)

Co-cited books


Language Models are Few-Shot Learners

(Tom B. Brown, Benjamin Mann, Nick Ryder, Melanie Subbiah, Jared Kaplan, Kewal Dhariwal, Prafulla Dhariwal, Arvind Neelakantan, Pranav Shyam, Girish Sastry, Amanda Askell, Sandhini Agarwal, Ariel Herbert-Voss, Gretchen Krueger, Tom Henighan, Rewon Child, Aditya Ramesh, Daniel M. Ziegler, Jeffrey Wu, Clemens Winter, Christopher Hesse, Mark Chen, Eric Sigler, Mateusz Litwin, Scott Gray, Benjamin Chess, Jack Clark, Christopher Berner, Sam McCandlish, Alec Radford, Ilya Sutskever, Dario Amodei) (2020)

Full text of this document


Sparks of Artificial General Intelligence: entire book as full text (7198 kByte)


Beat and this book


Beat added this book to the Biblionetz during his time at the Institut für Medien und Schule (IMS). Beat does not own a physical copy, but he does have a digital one. A digital version is available on the Internet (see above). So far, only a few objects in the Biblionetz cite this work.
